Predictive Analytics for TRL Assessment

Predictive analytics speeds and standardizes TRL assessments by automating evidence review, reducing bias, and improving investment and research decisions.


Predictive analytics is changing how we evaluate technology readiness. By combining data-driven models with structured frameworks like Technology Readiness Levels (TRL), we can now assess technology maturity faster, more consistently, and with fewer biases. This method is especially useful in fields like artificial intelligence, biotechnology, and renewable energy, where development is complex and time-sensitive.

Key takeaways:

- What is TRL? A 9-stage framework used to measure technology maturity, from basic research (TRL 1) to fully deployed systems (TRL 9).

- Why it matters: TRL helps researchers, investors, and organizations decide when a technology is ready for the next step, reducing risks and improving resource allocation.

- Role of predictive analytics: AI models analyze data like test results, regulatory milestones, and publication trends to automate and improve TRL assessments.

- Benefits: Faster evaluations, reduced human bias, and better resource management for deeptech projects.

Predictive tools, like Innovation Lens, are already transforming industries by identifying promising technologies and streamlining decision-making. However, challenges like data quality in early research stages remain. The future lies in combining predictive models with expert oversight for more reliable and actionable insights.


How Predictive Analytics Improves TRL Assessments

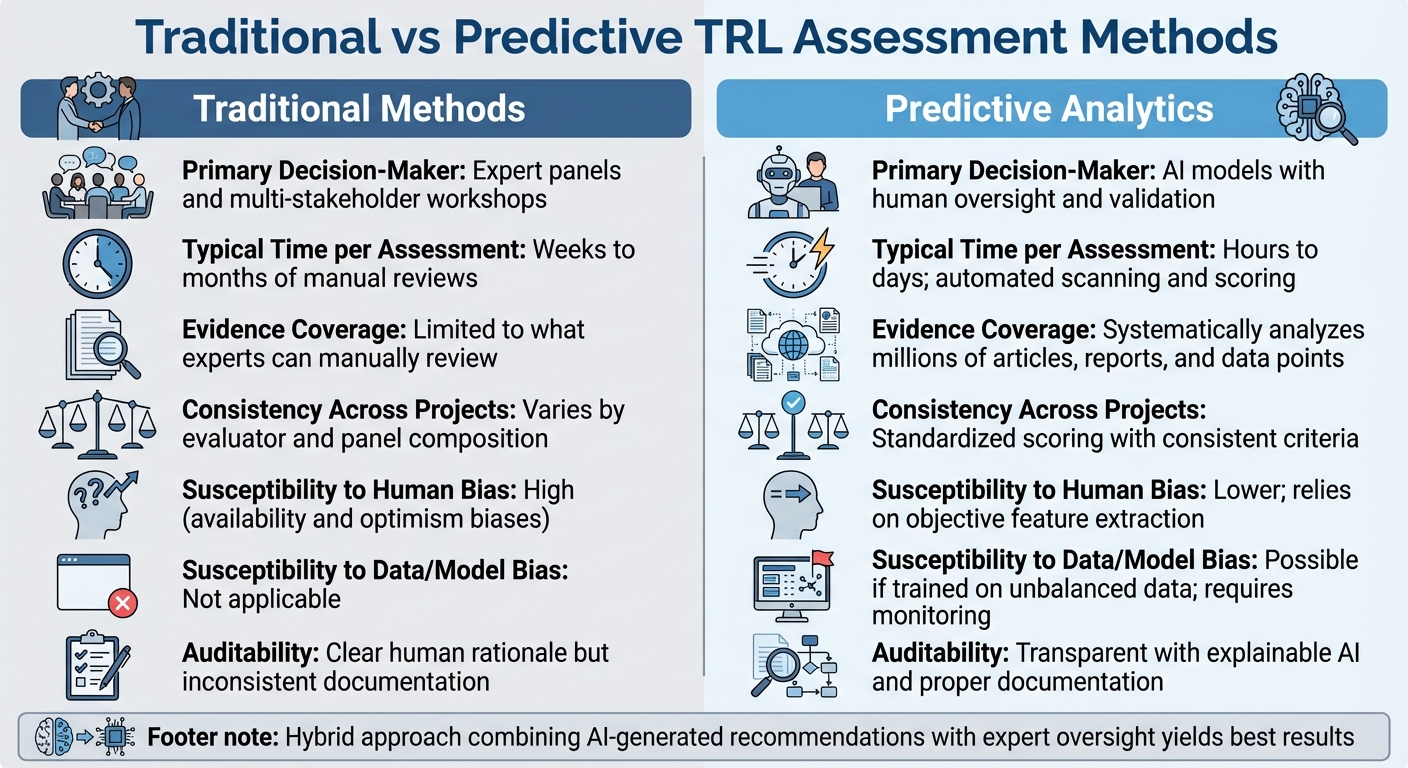

Traditional vs Predictive Analytics TRL Assessment Methods Comparison

Predictive analytics turns the often slow and subjective process of Technology Readiness Level (TRL) evaluation into something faster and more scalable. Traditionally, TRL assessments rely on expert panels that manually review qualitative evidence against established criteria. While thorough, this approach can take weeks or even months, and the results often vary depending on the evaluators. Predictive analytics automates evidence review, pulling relevant maturity signals from a wide range of data sources. This automation speeds up the process and applies the same criteria to every project, enabling organizations to evaluate hundreds of technologies at once - a critical advantage for managing the vast number of U.S. deeptech projects.

Another major benefit of predictive analytics is its ability to reduce hidden biases and misclassification risks. Manual reviews are prone to biases like availability bias (relying on easily accessible information) and optimism bias (overestimating a technology's readiness). These biases can cause a technology's maturity to be over- or underestimated. By converting unstructured evidence into measurable indicators - such as validated use cases or regulatory milestones - AI models can uncover patterns that might escape human reviewers. For instance, natural language processing (NLP) can analyze PubMed articles to determine whether a medical AI system has reached TRL 5–7 (relevant environment testing) or TRL 8–9 (operational deployment). This capability is especially valuable for deeptech investors and grantmakers who need to allocate resources wisely to the most promising technologies.
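To make the idea of turning unstructured evidence into measurable indicators concrete, here is a minimal Python sketch. The indicator names and phrase patterns are hypothetical and chosen only for illustration; a production system would more likely use a trained NLP model than hand-written patterns.

```python
import re

# Hypothetical indicator patterns (assumptions for illustration, not a validated lexicon).
INDICATOR_PATTERNS = {
    "lab_validation": r"\b(?:in vitro|bench test|laboratory validation)\b",
    "relevant_environment": r"\b(?:pilot study|field trial|prospective validation)\b",
    "operational_deployment": r"\b(?:deployed|in routine use)\b",
    "regulatory_milestone": r"\b(?:FDA clearance|CE mark|regulatory approval)\b",
}


def extract_indicators(text: str) -> dict:
    """Count case-insensitive matches of each indicator pattern in the text."""
    return {
        name: len(re.findall(pattern, text, flags=re.IGNORECASE))
        for name, pattern in INDICATOR_PATTERNS.items()
    }


if __name__ == "__main__":
    abstract = (
        "The model was deployed after a pilot study and a second pilot study; "
        "FDA clearance followed."
    )
    print(extract_indicators(abstract))
```

The counts could then feed a scoring model as numeric features, so every document is measured against the same indicators.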

AI Models for TRL Predictions

AI models use tools like neural networks, gradient boosting, and natural language processing to match scientific and technical signals with specific TRL stages. These systems are trained on historical data, learning how factors such as publication frequency, citation trends, collaboration networks, benchmark results, and reported deployments align with levels of readiness. For example, transformer-based language models can analyze arXiv papers and patent filings to track when a concept moves from basic research (TRL 1–2) to proof-of-concept and prototyping stages (TRL 3–6). This involves identifying shifts in language around experimental validation, system integration, and performance metrics.
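As a rough illustration of how historical signals can be matched to TRL bands, the sketch below swaps the neural networks and gradient boosting mentioned above for a much simpler nearest-centroid classifier. The three features, the training rows, and the "TRL 7-9" band are made up for the example.

```python
from math import dist
from statistics import mean

# Made-up training rows (assumption): each feature is a 0-1 share of a technology's
# documents that mention (experimental validation, system integration, deployment).
TRAINING = [
    ((0.1, 0.0, 0.0), "TRL 1-2"),
    ((0.2, 0.1, 0.0), "TRL 1-2"),
    ((0.6, 0.4, 0.0), "TRL 3-6"),
    ((0.7, 0.5, 0.1), "TRL 3-6"),
    ((0.9, 0.8, 0.7), "TRL 7-9"),
    ((0.8, 0.9, 0.9), "TRL 7-9"),
]


def fit_centroids(rows):
    """Average the feature vectors of each TRL band into one centroid per band."""
    grouped = {}
    for features, band in rows:
        grouped.setdefault(band, []).append(features)
    return {
        band: tuple(mean(column) for column in zip(*vectors))
        for band, vectors in grouped.items()
    }


def predict_band(centroids, features):
    """Return the band whose centroid is closest (Euclidean distance) to the features."""
    return min(centroids, key=lambda band: dist(centroids[band], features))


if __name__ == "__main__":
    centroids = fit_centroids(TRAINING)
    print(predict_band(centroids, (0.65, 0.45, 0.05)))
```

A real system would train on far more examples and richer features, but the shape is the same: learn from labeled history, then assign new technologies to the closest-matching readiness band.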

An AI-powered TRL assessment tool for clinical AI software highlights how this works in practice. Using retrieval-augmented generation, the tool systematically identifies missing or ambiguous evidence that manual reviews might overlook. It not only flags evidence gaps but also accounts for the regulatory, ethical, and technical complexities involved in clinical deployment. This reduces the need for lengthy multi-stakeholder meetings and significantly shortens review timelines[3].
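The evidence-gap idea can be sketched in a few lines of Python. This is not the tool's actual implementation: it covers only a toy retrieval step (token overlap between a readiness question and each passage) and leaves out the generation step entirely. The function names and the 0.5 threshold are assumptions.

```python
import re


def tokens(text):
    """Lowercase alphabetic tokens of a string, as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def best_score(question, passages):
    """Largest fraction of the question's tokens found in any single passage."""
    q = tokens(question)
    if not q or not passages:
        return 0.0
    return max(len(q & tokens(p)) / len(q) for p in passages)


def find_evidence_gaps(questions, passages, min_score=0.5):
    """Return the readiness questions with no sufficiently matching passage."""
    return [q for q in questions if best_score(q, passages) < min_score]


if __name__ == "__main__":
    questions = [
        "external validation cohort results",
        "bias audit across patient subgroups",
    ]
    passages = [
        "We report external validation results on an independent cohort.",
        "The model uses gradient boosting.",
    ]
    print(find_evidence_gaps(questions, passages))
```

Questions returned by `find_evidence_gaps` are the ones an expert would need to chase down, which is how a tool like this can focus review time on missing or ambiguous evidence.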

Predictive systems also adapt to different types of technologies - whether hardware, software, or machine learning (ML) - each of which has unique readiness characteristics. For instance, NASA and U.S. defense agencies have separate criteria for hardware (such as lab breadboards and environmental tests) and software (like integrated systems in relevant environments). Machine learning introduces its own considerations, including data quality, model robustness, and monitoring practices. Frameworks such as Machine Learning Technology Readiness Levels (MLTRL) address these factors. Predictive TRL systems incorporate features tailored to each domain: for ML and software, this might include test coverage, deployment logs, and model performance stability; for hardware, it could involve environmental test results and manufacturing readiness. Organizations can train specialized models for each domain to ensure accurate, context-aware predictions instead of forcing all technologies into a one-size-fits-all framework.
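One simple way to keep domains separate is a per-domain feature schema, sketched below in Python. The feature names are loosely derived from the examples above and are hypothetical, as is the `build_feature_vector` helper.

```python
# Hypothetical per-domain feature schemas (names are assumptions for illustration).
DOMAIN_FEATURES = {
    "software": ("test_coverage", "deployment_log_count"),
    "ml": ("test_coverage", "deployment_log_count", "performance_stability"),
    "hardware": ("environmental_tests_passed", "manufacturing_readiness"),
}


def build_feature_vector(domain, record):
    """Select and order the features for one domain, failing loudly on gaps."""
    if domain not in DOMAIN_FEATURES:
        raise ValueError(f"unknown domain: {domain!r}")
    names = DOMAIN_FEATURES[domain]
    missing = [name for name in names if name not in record]
    if missing:
        raise ValueError(f"missing features for {domain}: {missing}")
    # Extra keys in the record are ignored so one raw record can serve several schemas.
    return [float(record[name]) for name in names]


if __name__ == "__main__":
    record = {"environmental_tests_passed": 12, "manufacturing_readiness": 4}
    print(build_feature_vector("hardware", record))
```

Each schema can then feed its own specialized model, so a hardware prototype is never scored on test coverage and an ML system is never scored on manufacturing readiness.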

These AI-driven methods provide a strong foundation for comparing predictive analytics with traditional TRL assessments.

Comparing Traditional and Predictive TRL Assessment Methods

The differences between manual and predictive TRL evaluations are clear across several dimensions. Traditional methods rely heavily on expert judgment, which provides depth but often lacks speed and consistency. Predictive analytics, on the other hand, uses standardized, algorithm-driven scoring to process evidence at scale. However, it does require careful attention to data quality and transparency to avoid introducing new biases.

| Aspect | Traditional Methods | Predictive Analytics |
|---|---|---|
| Primary Decision-Maker | Expert panels and multi-stakeholder workshops | AI models with human oversight and validation |
| Typical Time per Assessment | Weeks to months of manual reviews | Hours to days; automated scanning and scoring |
| Evidence Coverage | Limited to what experts can manually review | Systematically analyzes millions of articles, reports, and data points |
| Consistency Across Projects | Varies by evaluator and panel composition | Standardized scoring with consistent criteria |
| Susceptibility to Human Bias | High (availability and optimism biases) | Lower; relies on objective feature extraction |
| Susceptibility to Data/Model Bias | Not applicable | Possible if trained on unbalanced data; requires monitoring |
| Auditability | Clear human rationale but inconsistent documentation | Transparent with explainable AI and proper documentation |

A hybrid approach that combines AI-generated recommendations with expert oversight often yields the best results. In this setup, AI models generate outputs with confidence scores and highlight supporting evidence, while experts review and adjust the findings as needed. This balance ensures accountability and allows experts to focus on higher-level strategic decisions. For U.S. health systems, federal agencies, and defense organizations managing a growing number of emerging technologies, this approach offers a practical way to enhance both speed and accuracy in TRL assessments.
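The hybrid workflow can be sketched as a small routing rule in Python. The `TrlPrediction` record, the track names, and the 0.85 threshold are assumptions for illustration; every output still reaches an expert, and only the depth of review changes.

```python
from dataclasses import dataclass, field


@dataclass
class TrlPrediction:
    technology: str
    predicted_trl: int
    confidence: float  # model confidence in [0.0, 1.0]
    evidence: list = field(default_factory=list)  # supporting passages or record IDs


def review_track(prediction, spot_check_at=0.85):
    """Decide how much expert attention a model output gets (threshold is an assumption)."""
    if not 0.0 <= prediction.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if not prediction.evidence:
        # Nothing to cite, so the expert cannot verify the score quickly.
        return "full_review"
    if prediction.confidence >= spot_check_at:
        return "spot_check"
    return "full_review"


if __name__ == "__main__":
    prediction = TrlPrediction("grid battery", 6, 0.92, ["pilot report"])
    print(review_track(prediction))
```

Routing confident, well-evidenced predictions to a lighter spot check is one way to free experts for the ambiguous cases while keeping a human accountable for every final TRL.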

Case Studies: Predictive Analytics in Deeptech Applications

Medical Software Development

In 2024, researchers at a leading academic center introduced the Clinical Artificial Intelligence Readiness Evaluator (CLAIRE) Lifecycle and Agent to streamline TRL-style assessments for clinical AI systems. This initiative brought together clinical informatics experts, data engineers, ethicists, and operational leaders to create a lifecycle model that integrates regulatory, ethical, and technical requirements. At its core, the CLAIRE framework uses an AI agent to automate evidence reviews and answer structured readiness questions. When tested on the synthetic "Diabetes Outcome Predictor", CLAIRE pinpointed previously noted evidence gaps, simplifying reviews and sharpening the focus of expert oversight. This approach significantly cut down the need for repeated, large-scale stakeholder meetings, allowing teams to spot documentation gaps earlier and speed up the readiness process for clinical AI software. This case highlights how AI-powered TRL assessments can shorten review cycles and concentrate expert efforts where they’re needed most[3].

Accelerating TRL in Renewable Energy Projects

Building on the success seen in clinical AI, predictive analytics is also transforming renewable energy initiatives. These tools are helping projects move more efficiently from laboratory validation stages (TRL 3–4) to operational demonstrations (TRL 6–7). The 2025 U.S. Technology Readiness Assessment Guidebook underscores the role of analytical models in validating performance, reliability, and cybersecurity within controlled settings before full-scale deployment[4]. Renewable energy developers now rely on performance forecasting models to predict key metrics like capacity factors, degradation rates, and potential failure modes. These insights provide the data necessary to justify advancing from lab-scale prototypes to pilot demonstrations. By combining TRLs with Technology Maturation Plans informed by simulation and predictive analytics, teams can zero in on critical uncertainties, reduce unnecessary rework, and ensure smoother TRL progression. These predictive models play a pivotal role in verifying essential milestones, aligning with broader efforts to refine and expedite TRL evaluations[4][7].
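Two of the metrics mentioned above have simple standard definitions, sketched here in Python. The constant-rate degradation model is a simplifying assumption, and the function names and sample numbers are illustrative rather than taken from any particular forecasting tool.

```python
def capacity_factor(energy_mwh, rated_power_mw, hours):
    """Delivered energy as a fraction of nameplate output over the same period."""
    if rated_power_mw <= 0 or hours <= 0:
        raise ValueError("rated power and hours must be positive")
    return energy_mwh / (rated_power_mw * hours)


def annual_degradation(first_year_mwh, last_year_mwh, years_between):
    """Constant yearly loss rate d with last = first * (1 - d) ** years_between."""
    if first_year_mwh <= 0 or years_between <= 0:
        raise ValueError("first-year output and years_between must be positive")
    return 1.0 - (last_year_mwh / first_year_mwh) ** (1.0 / years_between)


def projected_output(first_year_mwh, degradation, years_ahead):
    """Expected yearly output after compounding the degradation rate."""
    return first_year_mwh * (1.0 - degradation) ** years_ahead


if __name__ == "__main__":
    # A 1 MW system delivering 2,628 MWh over one year (8,760 hours).
    print(round(capacity_factor(2628.0, 1.0, 8760), 3))
    print(round(annual_degradation(100.0, 81.0, 2), 3))
```

Numbers like these, tracked against pre-agreed targets, are the kind of evidence a team could cite when arguing that a prototype is ready to move from lab validation to a pilot demonstration.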


Benefits for Deeptech Investors, Researchers, and Grantmakers

Reducing Investment Risks

AI tools help investors and researchers reduce risk by analyzing historical data, patents, and prototypes to predict a technology's path from early-stage development (TRL 1–3) to advanced stages (TRL 6–9). This makes it easier to identify low-maturity projects early, before resources are wasted. Traditional assessments often struggle here: over 70% of technologies at TRL 1–3 reportedly fail to progress, underscoring the importance of early intervention [3] [4] [6].

By validating critical properties - like cybersecurity at TRL 3 - using predictive models, researchers can delay costly prototype builds until the technology is deemed ready. This can save up to $500,000 per project [3] [4]. In fields like clinical AI, these streamlined assessments have cut evaluation times significantly and flagged crucial evidence gaps, helping teams avoid regulatory setbacks.

In renewable energy, predictive models have shown an 80% likelihood of certain projects reaching TRL 7 within 18 months, offering grantmakers a clear path to prioritize high-potential initiatives [2] [4]. NASA and Department of Defense experts emphasize that predictive tools can reduce investor risks by as much as 40%, largely by identifying critical technology elements early and avoiding low-maturity pitfalls [4] [8]. Additionally, automated evidence analysis speeds up TRL evaluations by 30–50%, allowing researchers to shift their focus from tedious documentation reviews to actual R&D [3] [4]. These advancements in analytics are transforming how deeptech projects are evaluated and funded, providing measurable risk reduction.

Using Innovation Lens for Predictive TRL Insights

The ability to reduce risks highlights the importance of platforms like Innovation Lens, which uses predictive analytics to deliver actionable TRL insights. This tool processes millions of studies weekly, generating tailored reports that forecast the maturity of groundbreaking technologies. One of its standout features is identifying underexplored research areas with high potential but low competition, giving investors and grantmakers a chance to act early before these fields become saturated. Its predictive accuracy outperforms baseline models by ~4x for PubMed topics and ~2x for Physics and Computer Science [1].

For those managing research portfolios, Innovation Lens offers weekly updates on the technical maturity of various fields. Its dashboard provides clear visualizations, enabling users to filter technologies with a greater than 75% chance of reaching TRL 7 or higher. This approach mirrors the structured TRL assessments used in defense and aerospace sectors [4] [8]. By analyzing tens of millions of studies continuously, Innovation Lens not only reduces the manual workload of screening opportunities but also ensures consistent evaluations across teams. This combination of efficiency and precision makes it an essential tool for navigating the complex landscape of deeptech investments.
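A threshold filter of this kind is easy to reproduce on any set of forecasts. The Python sketch below does not use Innovation Lens or its API; the record layout and the `p_reach_trl7` field name are hypothetical.

```python
def shortlist(forecasts, min_probability=0.75):
    """Keep forecasts strictly above the threshold, most likely first."""
    picked = [f for f in forecasts if f["p_reach_trl7"] > min_probability]
    return sorted(picked, key=lambda f: f["p_reach_trl7"], reverse=True)


if __name__ == "__main__":
    # Made-up forecast records for illustration only.
    forecasts = [
        {"technology": "solid-state battery", "p_reach_trl7": 0.81},
        {"technology": "perovskite tandem cell", "p_reach_trl7": 0.75},
        {"technology": "clinical triage model", "p_reach_trl7": 0.93},
    ]
    for item in shortlist(forecasts):
        print(item["technology"], item["p_reach_trl7"])
```

Because the comparison is strict, a technology at exactly 75% is left out, matching the "greater than 75%" wording.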

Challenges and Future Directions

Data Quality and Availability Issues

Predictive analytics for Technology Readiness Level (TRL) assessments faces tough hurdles, especially due to limited data availability in the early stages of research. According to the U.S. Department of Defense's 2025 Technology Readiness Assessment Guidebook:

> TRAs depend on the quality and availability of credible data [4].

At TRL 1–3, where research is in its infancy and applications are still speculative, the evidence base is often weak. Studies highlight consistent gaps in early-stage data, making it difficult for AI models to perform well on such sparse inputs. The unstructured and varied nature of early research data further reduces the reliability of supervised models. This is where methods like standardization and retrieval-augmented strategies become critical [7][8].

Improving data quality can involve standardizing how experiments are documented and supplementing internal data with external sources [3][5]. However, the Department of Defense stresses that assigning higher TRLs requires a robust "body of data or accepted theory", which remains a key challenge for predictive models [4]. These limitations highlight the pressing need for evolving methodologies in predictive analytics.

Future Trends in Predictive Analytics

Overcoming these data challenges is essential to tapping into emerging trends in the field. Predictive analytics is shifting toward continuous, lifecycle-based readiness tracking. Frameworks like MLTRL are paving the way by incorporating checkpoints for data management, reproducibility, and monitoring, enabling real-time tracking of AI maturity in deeptech applications [6].

Advanced AI models are becoming better equipped to handle sparse data, particularly through retrieval-augmented strategies [3][6]. For example, platforms like Innovation Lens use curated reports and predictive analytics to create "future abstracts", which highlight transformative projects before they become mainstream [1].

Combining expert insights with algorithmic predictions represents a promising path forward, especially in scenarios where public data is limited. This hybrid approach could provide deeper and more actionable TRL insights for decision-makers.

Conclusion

Predictive analytics is reshaping how TRL assessments are conducted for deeptech stakeholders. Instead of relying on periodic expert reviews, this approach enables continuous, data-driven evaluations that speed up innovation. By reducing inconsistencies and shortening review timelines, AI-driven methods pave the way for quicker deployment of new technologies, whether in medical AI or renewable energy [3].

The advantages ripple throughout the deeptech ecosystem. Investors gain early insights into a technology's maturity, helping them differentiate between early-stage concepts and near-deployment projects. Researchers benefit from aligning their work with standardized maturity frameworks like MLTRL, improving their chances of securing funding and regulatory approvals. Grantmakers can make better-informed decisions, focusing on promising yet underexplored areas by relying on global research trends rather than subjective opinions. Platforms that merge predictive analytics with domain expertise amplify these benefits, providing a comprehensive toolset for stakeholders.

A great example of this evolution is Innovation Lens. By combining predictive analytics with in-depth reports, the platform helps stakeholders spot promising projects early. Its ability to create "future abstracts" for emerging research areas aligns seamlessly with predictive TRL assessments, offering a glimpse into which technologies are likely to progress the fastest [1].

These advancements, supported by guidelines from the U.S. Department of Defense, are turning TRL assessments into strategic tools. The 2025 guidebook underscores the importance of TRAs in balancing cost, schedule, and technical risks [4]. Predictive analytics enhances this process, shifting TRL assessments from mere compliance tasks to critical decision-making tools. By integrating AI models, expert knowledge, and standardized frameworks, this approach offers a more precise, efficient, and actionable way to evaluate technology maturity across the deeptech landscape.

FAQs

How does predictive analytics help reduce bias in assessing Technology Readiness Levels (TRL)?

Predictive analytics plays a key role in making TRL assessments more impartial by leaning on data-driven models and algorithms. This approach helps cut down on the sway of personal opinions, leading to a more objective evaluation of how mature a technology truly is.

By examining historical data and spotting trends, these predictive tools deliver consistent and dependable insights. This not only boosts the accuracy and efficiency of TRL assessments but also reduces the risk of human error, encouraging fair and balanced decision-making.

What are the main challenges of applying predictive analytics to early-stage Technology Readiness Level (TRL) evaluations?

Using predictive analytics to assess early-stage Technology Readiness Levels (TRLs) isn't without its hurdles. A key challenge lies in the scarcity and quality of data available for emerging technologies. Early-stage projects typically don’t have robust datasets, making it tough to draw reliable conclusions. On top of that, the complexity of these technologies, combined with a lack of historical benchmarks for comparison, makes accurate modeling a tricky task.

Another complication is the potential for biases in predictive models. When the underlying data reflects incomplete or skewed trends, the resulting assessments can be unreliable. These issues collectively make it difficult to ensure consistent and precise evaluations for technologies still in their developmental stages.

How do AI models assess a technology's readiness level?

AI models evaluate how ready a technology is by looking at several key factors: its technical maturity, performance data, development milestones, and validation results. Using predictive analytics, these models examine historical data and ongoing progress to spot trends and estimate the technology's current readiness level.

This method simplifies the evaluation process, providing a clearer and more efficient way to determine a technology's stage in its development path.